Enhancing Case Classification and Routing with Tone and Sentiment Analysis using Salesforce Einstein Generative AI, Apex, and Flow - Part 1

The customer service industry relies on effective case classification and routing capabilities to enhance agent productivity, minimize case transfers and escalations, speed up issue resolution, and maintain consistent data quality.

To achieve this, Salesforce Einstein for Service uses a predictive model based on historical case data and machine learning to boost productivity. However, setting it up can become complex, and the system's accuracy relies heavily on high-quality data, requiring regular data management.

In contrast, Large Language Models (LLMs) give developers and admins new opportunities to create innovative applications on the Salesforce platform. LLMs can reduce development effort and dependence on historical data while still achieving high accuracy. With the new Generative AI features and Prompt Builder on the platform, leveraging LLMs on Salesforce has become easier and more accessible.

I’ve created a two-part blog series to explore a sample app I built. The idea is to demonstrate how to leverage Salesforce’s Einstein Generative AI prompt templates with Apex and Flow for effective case prioritization, classification, and routing. In this first post, I’ll walk through the end-user experience with a demo video. Then, in the second post, I’ll explore the implementation details.

First, I’ve outlined the goals to achieve:

Case prioritization - Using pre-defined custom rules, prioritize cases based on the customer’s tone and sentiment.

Case classification - Leverage LLMs to classify cases into different categories for routing, along with providing reasoning for each classification.

Improve the quality of the case insights - Identify the nature of the problem to ensure that even unconventional customer communications are interpreted correctly, enhancing data quality and case handling efficiency.

Test use case - Prioritization:

Input:

Case Subject: Billing statement incorrect

Case description: My statement is incorrect - I have been billed twice for this month. the same issue happened last month, I want this to be solved as soon as possible

Outcome:

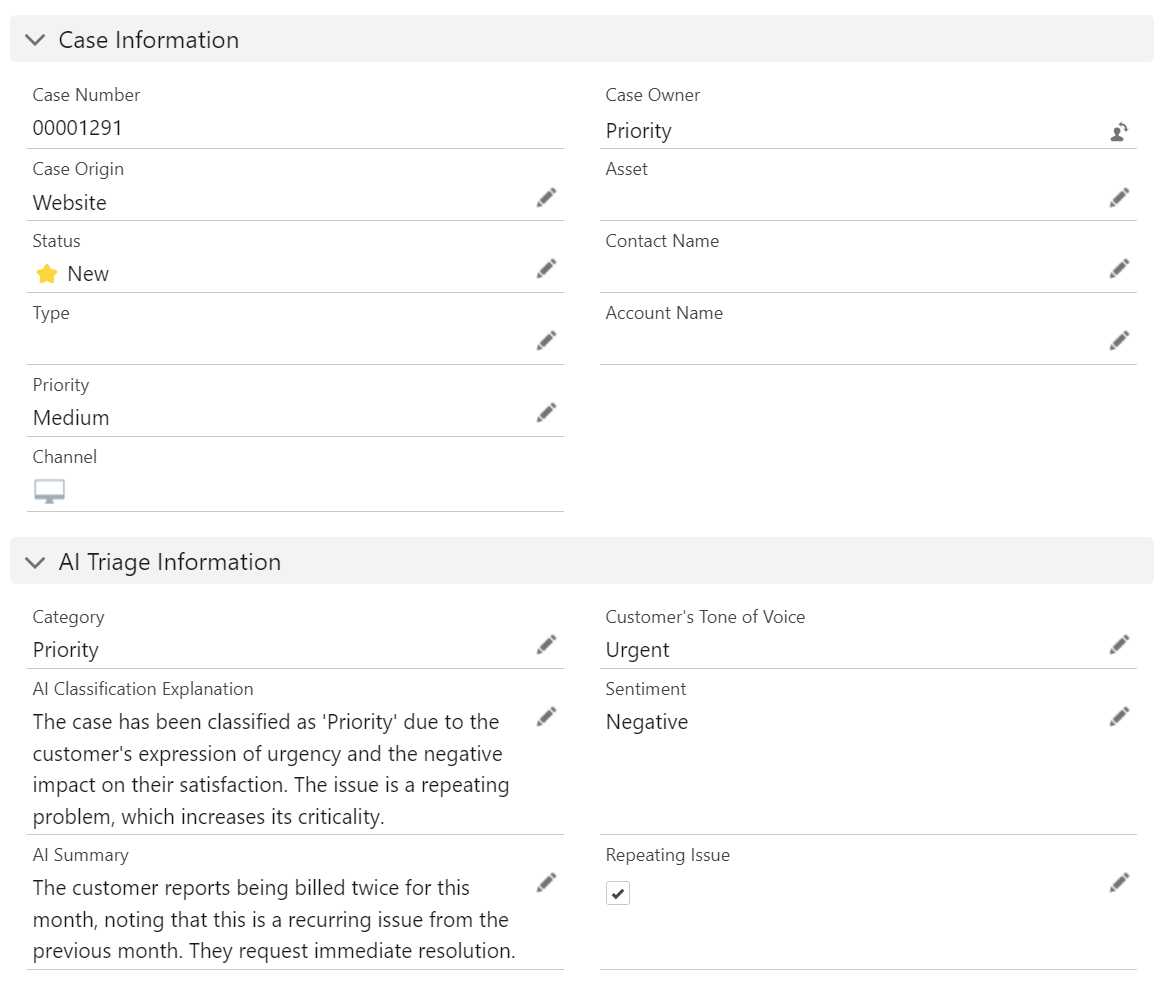

Once the case is created with the provided information, LLM automation takes over to classify and route it. You can see the results in the screenshot of the newly created case. The case has been prioritized because of the customer's dissatisfaction and the recurrence of the issue, and all AI-generated information is available in the AI Triage section.

This demonstrates how Generative AI not only prioritizes but also provides context, making it easier for support teams to respond effectively.
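To make the idea of "pre-defined custom rules" more concrete, here is a minimal sketch of how tone, sentiment, and recurrence could be mapped to a priority. It is written in Python purely for illustration; the sample app itself uses Apex and Flow, and the field names, label values, and thresholds below are assumptions of mine, not the rules used in the app.

```python
from dataclasses import dataclass


@dataclass
class TriageSignals:
    """Signals an LLM might return for a case (hypothetical field names)."""
    sentiment: str      # assumed labels: "negative", "neutral", "positive"
    tone: str           # assumed labels: "frustrated", "angry", "calm", ...
    is_recurring: bool  # True when the customer reports a repeat issue


def prioritize(signals: TriageSignals) -> str:
    """Map triage signals to a case priority using simple, explicit rules."""
    dissatisfied = (
        signals.sentiment == "negative"
        or signals.tone in {"frustrated", "angry"}
    )
    if dissatisfied and signals.is_recurring:
        return "High"
    if dissatisfied or signals.is_recurring:
        return "Medium"
    return "Low"


# The billing example: an unhappy customer reporting a repeat problem.
billing_case = TriageSignals(sentiment="negative", tone="frustrated", is_recurring=True)
print(prioritize(billing_case))
```

Keeping the rules explicit like this means the LLM only has to supply the signals, while the business decides what each combination of signals means for priority.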

Test use case - Classification:

Input:

Case Subject: Product damaged, mishandled during shipping.

Case description: When my order arrived, the corner of the box was crushed, and the product had visible damage to it. How would I return this to get a new one?

Outcome:

The case is categorized as Customer Service because it requires assistance; see the details below.

Generative AI successfully interprets the issue type and provides an appropriate classification, saving time and improving accuracy.
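As a rough illustration of how a classification and its reasoning could drive routing, the sketch below parses a structured LLM response and picks a queue. It is Python for readability only (the sample app uses Apex and Flow). The JSON shape, the queue names, and the `route_case` helper are all hypothetical; only the Customer Service and Technical Support categories come from the examples in this post.

```python
import json

# Hypothetical mapping from category to queue; queue names are made up.
CATEGORY_TO_QUEUE = {
    "Customer Service": "Customer Service Queue",
    "Technical Support": "Technical Support Queue",
}
FALLBACK_QUEUE = "Manual Review Queue"  # assumed safety net for odd responses


def route_case(llm_response: str) -> dict:
    """Parse an assumed JSON response and choose a queue for the case."""
    try:
        payload = json.loads(llm_response)
    except json.JSONDecodeError:
        payload = {}
    if not isinstance(payload, dict):
        payload = {}

    category = payload.get("category")
    if not isinstance(category, str):
        category = None

    return {
        "category": category,
        "reasoning": payload.get("reasoning", ""),
        # Unknown or missing categories go to a human instead of being guessed.
        "queue": CATEGORY_TO_QUEUE.get(category, FALLBACK_QUEUE),
    }


response = '{"category": "Customer Service", "reasoning": "Customer needs help with a return."}'
print(route_case(response))
```

Falling back to a manual review queue when the response is malformed or the category is unrecognized is one way to keep a human in the loop.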

Test use case - Case Insights:

Input:

Case Subject: hair dryer malfunctioning after AC power switch; concerns about 'cold fire' issue.

Case description: when I switched AC power my hair dryer only blowing out 'cold fire' and fears it might be dragon-breathed. Can I ask for a 'mythical creature handler? :)

Outcome:

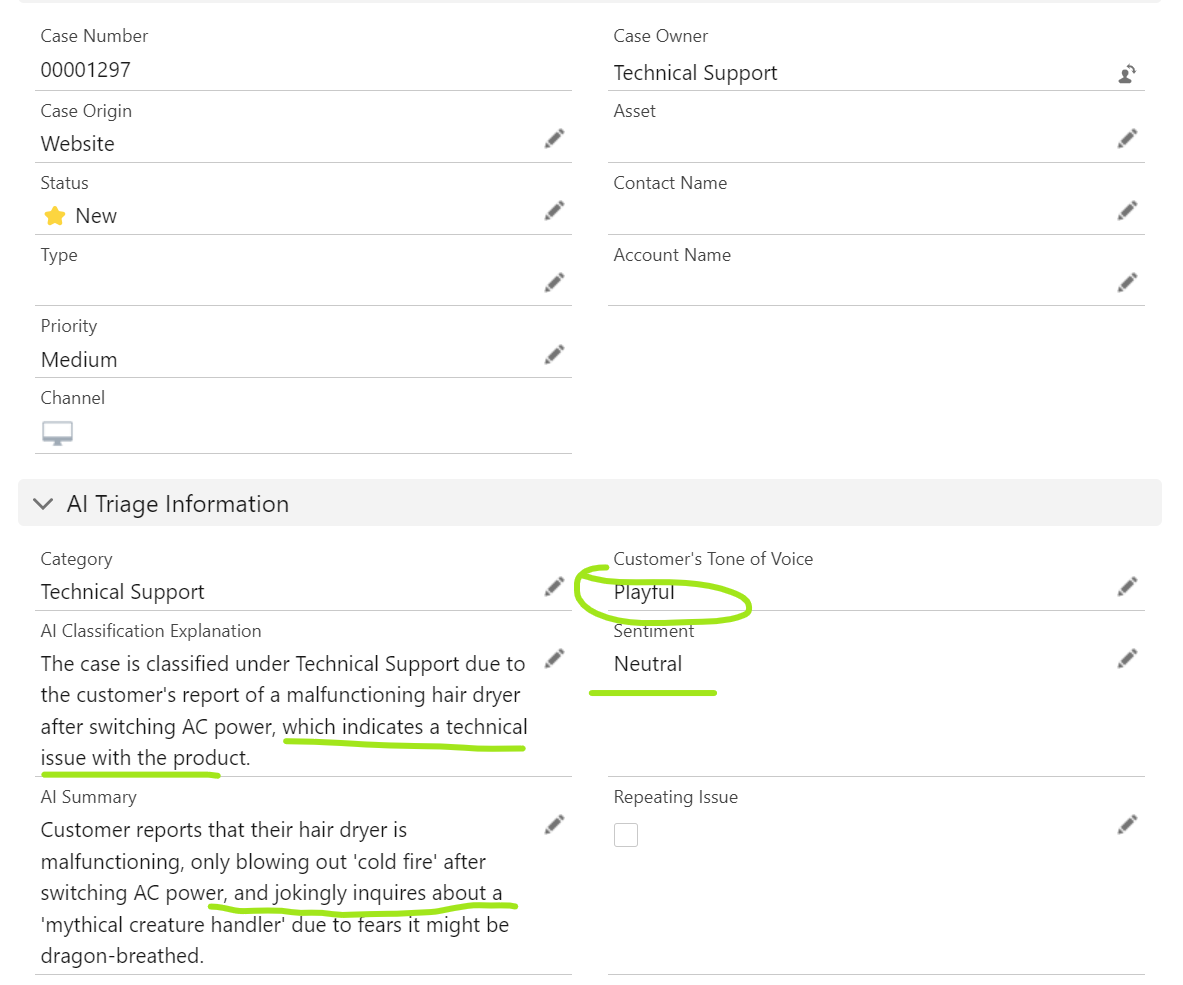

The case is categorized as Technical Support because it is a technical issue related to the product.

The AI-generated insight notes that the customer is using humor to describe the issue, but that this does not alter the nature of the problem: their hair dryer is malfunctioning. This insight enhances the overall quality of the information captured in the case.

This capability ensures that even unconventional customer communications are interpreted correctly, enhancing data quality and case handling efficiency.

Generative AI can significantly enhance the early stages of the case support process by providing valuable information and assistance. Despite the rapid advancements in AI, keeping humans in the loop remains crucial to leveraging Responsible AI for achieving the best outcomes. Having said that, I'm excited about the opportunities provided by Einstein Generative AI to spark more innovations on the platform. The productivity gains for both end users and developers are immense.

In my next blog post, I'll dive into implementation details and share a sample code repository. Improving data quality in our organization will be my next focus to further boost prompt performance. Your questions and suggestions are always welcome! Please feel free to contact me.